[Editor’s note: this is the third in a series on Internet-of-Things security. You can find the introductory piece here and the prior piece here.]

This was going to be an article about authentication. In putting it together, I realized that there are a number of fundamental concepts that can help the authentication discussion. Some of us tend to toss out words related to those concepts rather freely – and tentatively, perhaps, because if we were pushed hard, we’d have to admit that we don’t understand the details. And this is especially true in security, where those details tend to accrue only to a select few.

So if you’re a security whiz, what follows will all be familiar stuff. If you’ve dealt with browser security, it may also be familiar – because much of Internet of Things (IoT) security is based on existing technology, like TLS. But the IoT is bringing a lot of new folks into the game, and for them (or should I say, “us”), this stuff isn’t obvious.

Authentication is a dance involving the exchange of various artifacts. Describing those artifacts in an authentication article was creating a TL;DR piece, so I’ve broken it up. Exactly how that dance is choreographed will be the topic of the next article; here we’re going to focus on three critical artifacts that can take on numerous roles in IoT security: keys, signatures, and certificates.

Keys to the Kingdom

When sending a secret message, having the key for decoding the message is fundamental. Ideally, only you and your intended recipient have the key so that no one else can break into the message.

But there’s this problem that’s almost re-entrant: when you start up communication with some other node and you want the traffic to be encrypted, then you need to send them a key. But, simplistically thinking, you don’t want to send a key in plain text – anyone could read it and have access to your conversation. So you want to encrypt the message that contains the key, except that… how will the recipient read the key if they need that key – which they haven’t read yet – to decrypt the key?

This scenario might apply with so-called symmetric keys, where both ends hold the same key, which is supposed to stay secret (a shared secret key). You wouldn’t want to email such a key, because you couldn’t encrypt it; you would need to install it in a more secure fashion, like loading it from a secure dongle in a controlled environment (frankly, even that doesn’t sound very secure). Which is why this approach scales poorly for IoT-like installations; there would be no practical way to do that for every possible connection on every device out there.

The other thing about shared secret keys is that all of the components using them tend to have the same key. That means that, if the key is compromised, then all nodes using that key are in trouble – not just the compromised node. Replacing them all with a new key becomes yet another hassle (and devices are at risk – or down – until all keys are replaced).
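To make the shared-key problem concrete, here is a minimal Python sketch. It is a toy: the `keystream` and `xor_crypt` helpers and the key value are invented for illustration, and a real system would use a vetted cipher such as AES rather than this hash-based stand-in. The only point is that one and the same key both locks and unlocks the message.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Stretch a shared key into `length` pseudo-random bytes (toy construction)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]

def xor_crypt(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XORing with the same keystream twice restores the input."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

# Hypothetical key that was loaded onto every device in the fleet.
shared_key = b"installed-at-the-factory"

ciphertext = xor_crypt(shared_key, b"thermostat: 21.5 C")

# Any node holding the same key can read the message...
print(xor_crypt(shared_key, ciphertext))

# ...and so can anyone who pulled that key out of a different device.
stolen_key = shared_key
print(xor_crypt(stolen_key, ciphertext))
```

Because every node holds the same secret, extracting it from the weakest device exposes the traffic of all the others.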

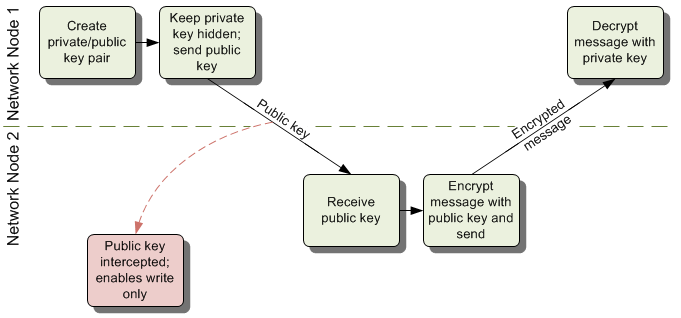

Asymmetric keys are more typical for the IoT; the SSL/TLS in your browser relies on this as it connects to random supposedly-secure websites. The idea here is that, instead of one key, there are really two mathematically related keys. A network endpoint will generate this key pair, and it will keep one of them; this is the private key. The other key will be sent to the other end of the communication link; it’s the public key (and anyone can see it – that’s why it’s called “public”).

We’re not going to deal with the math here (that’s for a future discussion), but here’s the important part:

- You use the public key to encrypt a message. In other words, when you send the other end a public key, you’re saying, “If you want to send me something secret, encrypt it with this key. Then only I can read it.”

- You use the private key for decoding messages you receive. This works specifically because of the specialized math used to generate the key pair. By definition, unless you’ve been compromised, no one else has that key, so no one else can read the messages encrypted with the associated public key.

In other words, if someone gets ahold of the public key, it won’t let them read the messages – only the private key can be used for reading – and that key is (hopefully) protected (also more on that in a future installment).
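The two rules above can be sketched with textbook RSA in a few lines of Python. This is an illustration only, under loud assumptions: the primes are two well-known Mersenne primes rather than secret random ones, there is no padding, and the `encrypt` and `decrypt` helpers are names I made up. Real systems rely on a vetted library.

```python
# Toy RSA key pair built from two publicly known Mersenne primes.
# (Real keys use large, secret, randomly chosen primes.)
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                            # modulus, part of both keys
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (needs Python 3.8+)

public_key = (E, N)    # safe to hand to anyone
private_key = (D, N)   # never leaves the device

def encrypt(message: bytes, key) -> int:
    """Turn the message into a number and raise it to the public exponent."""
    exponent, modulus = key
    m = int.from_bytes(message, "big")
    if m >= modulus:
        raise ValueError("message too long for this toy modulus")
    return pow(m, exponent, modulus)

def decrypt(ciphertext: int, key) -> bytes:
    """Undo the encryption with the matching private exponent."""
    exponent, modulus = key
    m = pow(ciphertext, exponent, modulus)
    return m.to_bytes((m.bit_length() + 7) // 8, "big")

# The other end only ever sees public_key.
sealed = encrypt(b"open the valve", public_key)

# Only the holder of private_key gets the text back.
print(decrypt(sealed, private_key))
```

Note that nothing secret had to travel over the wire to set this up, which is what breaks the chicken-and-egg problem described earlier.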

In a key exchange, two nodes give each other a public key to work with. The keys will be different, meaning that a sending node wouldn’t be able to read a message it just encrypted. (Of course, it knows the contents, because it created the message.) Once encrypted, only the receiving node can read the message.
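Here is a rough sketch of that exchange between two nodes, again using toy textbook RSA. The node names (`sensor`, `gateway`), the helper functions, and the choice of known Mersenne primes are all assumptions made for illustration; none of this is safe for real use.

```python
def make_key_pair(p: int, q: int, e: int = 65537):
    """Toy RSA key pair from two primes: returns (public, private)."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))   # needs Python 3.8+
    return (e, n), (d, n)

def apply_key(value: int, key) -> int:
    """Raise a number to the key's exponent, modulo the key's modulus."""
    exponent, modulus = key
    return pow(value, exponent, modulus)

# Each node generates its own pair (known Mersenne primes, for brevity only).
sensor_public, sensor_private = make_key_pair(2**61 - 1, 2**89 - 1)
gateway_public, gateway_private = make_key_pair(2**107 - 1, 2**127 - 1)

# The exchange: each node hands the other its public key, and nothing else.
sensor_knows = {"own_private": sensor_private, "peer_public": gateway_public}
gateway_knows = {"own_private": gateway_private, "peer_public": sensor_public}

reading = int.from_bytes(b"temp=21.5", "big")
to_gateway = apply_key(reading, sensor_knows["peer_public"])

# The sensor's own private key does not undo what it just encrypted...
sensor_attempt = apply_key(to_gateway, sensor_knows["own_private"])

# ...but the gateway's private key, which was never shared, does.
received = apply_key(to_gateway, gateway_knows["own_private"])

print(sensor_attempt == reading, received == reading)
```

Traffic in the other direction works the same way, with the gateway encrypting under `sensor_public`.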

Keys can also be ephemeral. Unlike the shared-secret approach, where the same keys are used on an ongoing basis, new keys can be generated for each new connection. That limits exposure if one of those keys is compromised (although it’s a relative thing – significant damage can occur before any compromise has been detected).
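As a sketch of what "new keys for each connection" might look like, the snippet below generates a fresh toy RSA pair every time a connection is opened. The function names are invented, the primes are only 32 bits (laughably small), and trial division stands in for a proper primality test, so treat this purely as an illustration of the idea.

```python
import secrets
from math import gcd, isqrt

E = 65537   # public exponent used for every pair

def is_prime(n: int) -> bool:
    """Trial division; fine for 32-bit toys, useless for real key sizes."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    return all(n % f for f in range(3, isqrt(n) + 1, 2))

def random_prime(bits: int = 32) -> int:
    while True:
        # Force the top bit (full size) and the bottom bit (odd).
        candidate = secrets.randbits(bits) | (1 << (bits - 1)) | 1
        if is_prime(candidate):
            return candidate

def new_ephemeral_key_pair():
    """Return a fresh (public, private) pair; nothing is reused between calls."""
    while True:
        p, q = random_prime(), random_prime()
        phi = (p - 1) * (q - 1)
        if p != q and gcd(E, phi) == 1:
            return (E, p * q), (pow(E, -1, phi), p * q)

for connection in range(3):
    public, private = new_ephemeral_key_pair()   # one pair per connection
    secret = int.from_bytes(b"hi " + str(connection).encode(), "big")
    sealed = pow(secret, *public)
    assert pow(sealed, *private) == secret
    # When the connection closes, both keys are simply thrown away.
```

A key stolen from one connection then says nothing about the traffic on the next one.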

So that protects someone from reading your secrets. But, equipped with your public key, some lurking third party can still send you encrypted messages – and they might be bogus. Even with the original key exchange, you don’t really know whom the key is from. This is where signed certificates come in.

Signatures and Certificates

There are two separate notions in that last statement: “signed” and “certificate.” Anything can be signed, and here we have the reverse of the “write/encrypt with public key, read/decrypt with private key” rule above. For signing, you hash a message and then perform some math on the hash with your private key; the result is the signature, which is appended to the actual message. The recipient can then use your public key to recover the hashed value from the signature.

Having unlocked the hash result, and having the message to which the signature was appended, the recipient can then run the same hash algorithm on that message and confirm that its calculated hash matches the hash in the signature. If they match, then the message contents have not been tampered with by someone other than the sender (with very high probability – nothing is guaranteed 100%).
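The sign-then-verify steps can be sketched as follows. As before, this is toy textbook RSA with publicly known primes and no padding scheme, and `digest`, `sign`, and `verify` are names I chose for the example; production code would use a standard signature scheme from a vetted library.

```python
import hashlib

# Toy key pair from two known Mersenne primes (illustration only).
P, Q = 2**107 - 1, 2**127 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)

def digest(message: bytes) -> int:
    # Toy shortcut: squeeze the SHA-256 output below the modulus.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes, private_exponent: int) -> int:
    """Hash the message, then transform the hash with the private key."""
    return pow(digest(message), private_exponent, N)

def verify(message: bytes, signature: int, public_exponent: int) -> bool:
    recovered = pow(signature, public_exponent, N)   # "unlock" the hash
    return recovered == digest(message)              # recompute and compare

message = b"firmware v2.1 is ready"
signature = sign(message, D)          # sent along with the message

print(verify(message, signature, E))                     # untouched message
print(verify(b"firmware v6.6 is ready", signature, E))   # altered in transit
```

Only the hash is processed with the private key, so the message itself can be any length and still travels in the clear next to its signature.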

So that’s a helpful thing; it tells you that message integrity is preserved. But it still doesn’t prove to you who sent the message. You need a certificate for that. A certificate consists of various pieces of information about the sender, including the sender’s public key, and it will be signed by… someone. You could certainly sign it yourself, but will anyone trust just you? This is where we get to the whole trust issue that we covered a couple of weeks ago. You need a chain of trust that goes back to someone who can be unquestioningly trusted.
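Stripped to its bones, a certificate is a small record about the sender, with a signature over that record. The sketch below is a loose illustration and not the real X.509 format: the field names, the "Example CA" issuer, the helper functions, and the toy RSA keys built from known Mersenne primes are all assumptions.

```python
import hashlib
import json

def make_key_pair(p, q, e=65537):
    """Toy RSA key pair from two primes: returns (public, private)."""
    n = p * q
    return {"e": e, "n": n}, {"d": pow(e, -1, (p - 1) * (q - 1)), "n": n}

def body_digest(body, n):
    encoded = json.dumps(body, sort_keys=True).encode()
    return int.from_bytes(hashlib.sha256(encoded).digest(), "big") % n

def issue_certificate(subject, subject_public, issuer, issuer_private):
    """Bind a name to a public key, signed with the issuer's private key."""
    body = {"subject": subject, "public_key": subject_public, "issuer": issuer}
    n = issuer_private["n"]
    signature = pow(body_digest(body, n), issuer_private["d"], n)
    return {"body": body, "signature": signature}

def certificate_is_valid(cert, issuer_public):
    n = issuer_public["n"]
    recovered = pow(cert["signature"], issuer_public["e"], n)
    return recovered == body_digest(cert["body"], n)

ca_public, ca_private = make_key_pair(2**107 - 1, 2**127 - 1)
device_public, device_private = make_key_pair(2**61 - 1, 2**89 - 1)

# Signed by someone else: "Example CA" vouches that this key is sensor-17's.
ca_signed = issue_certificate("sensor-17", device_public, "Example CA", ca_private)

# Self-signed: the device vouches for itself.
self_signed = issue_certificate("sensor-17", device_public, "sensor-17", device_private)

print(certificate_is_valid(ca_signed, ca_public))        # checks against the CA's key
print(certificate_is_valid(self_signed, device_public))  # checks against its own key
```

Both checks pass mathematically; the difference lies in whether the verifier has any reason to trust the key that did the signing.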

As mentioned in that piece, you can “self-sign” certificates if you think people will trust you. But here we get into some nuance with respect to the IoT. IoT internet traffic may be for one of two purposes. In one case, information may be requested by a browser or other app for presentation to someone on a computer or phone. So a standard browser may be the application requesting information. In other cases, the traffic may simply be machine-to-machine (m2m) communication, with no browser involved.

The reason this makes a difference is that browsers typically want to see a chain of trust that terminates with a known certificate authority (CA), like Verisign. Your browser comes with certain certificates built in, and it looks for these in secure connections.

If you self-sign for a browser, then you need to get your users to add that certificate to their browsers – probably not a user-friendly requirement. If that doesn’t happen, then the browser will balk every time it tries to talk to your system, saying, “Whoa, dude, this place looks shady…” You can tell your users to go ahead and click past that, but the optics are suboptimal, to say the least.

There apparently is a way to become a “private CA,” but again, most of the browsers in the world have never heard of you, so that doesn’t really work for a broad distribution. It’s most effective for things like internal testing in a limited environment where you can control the whole network; you can then avoid the costs of a CA while testing, without dealing with the browser hassles.

So, for browser interaction, you realistically need to include a CA in the chain of trust. If that certificate is seen in the message, then the browser will be happy. For non-browser m2m traffic, however, both ends of the communication can behave any way they want. For a tightly restricted, walled-garden approach, a company can easily self-sign because it owns all points in the network. In a world of greater interoperability, with different network elements from different, unrelated companies, keeping a well-known CA in the chain of trust is probably a good idea.
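To show how a verifier might walk a chain back to something it already trusts, here is a simplified sketch. It assumes a trust store that maps issuer names to built-in public keys, a chain ordered from the endpoint upward, and the same toy RSA signing as before; the names ("Example Root CA" and so on) and helpers are hypothetical, and real validation involves many more checks.

```python
import hashlib
import json

def make_key_pair(p, q, e=65537):
    """Toy RSA key pair from two primes: returns (public, private)."""
    n = p * q
    return {"e": e, "n": n}, {"d": pow(e, -1, (p - 1) * (q - 1)), "n": n}

def body_digest(body, n):
    encoded = json.dumps(body, sort_keys=True).encode()
    return int.from_bytes(hashlib.sha256(encoded).digest(), "big") % n

def issue(subject, subject_public, issuer, issuer_private):
    body = {"subject": subject, "public_key": subject_public, "issuer": issuer}
    n = issuer_private["n"]
    return {"body": body, "signature": pow(body_digest(body, n), issuer_private["d"], n)}

def signature_ok(cert, issuer_public):
    n = issuer_public["n"]
    return pow(cert["signature"], issuer_public["e"], n) == body_digest(cert["body"], n)

def chain_is_trusted(chain, trust_store):
    """chain[0] is the endpoint; each later entry certifies the issuer before it."""
    for position, cert in enumerate(chain):
        issuer = cert["body"]["issuer"]
        if issuer in trust_store:                  # reached a built-in root
            return signature_ok(cert, trust_store[issuer])
        if position + 1 == len(chain):             # ran out of certificates
            return False
        parent = chain[position + 1]["body"]
        if parent["subject"] != issuer or not signature_ok(cert, parent["public_key"]):
            return False
    return False

# Known Mersenne primes keep the example short; real keys are secret and random.
root_public, root_private = make_key_pair(2**521 - 1, 2**607 - 1)
mid_public, mid_private = make_key_pair(2**107 - 1, 2**127 - 1)
device_public, device_private = make_key_pair(2**61 - 1, 2**89 - 1)

mid_cert = issue("Example Intermediate", mid_public, "Example Root CA", root_private)
device_cert = issue("sensor-17", device_public, "Example Intermediate", mid_private)
self_signed = issue("sensor-17", device_public, "sensor-17", device_private)

browser_store = {"Example Root CA": root_public}   # what ships with the browser
walled_garden_store = {"sensor-17": device_public}  # what a closed m2m network installs

print(chain_is_trusted([device_cert, mid_cert], browser_store))   # CA in the chain
print(chain_is_trusted([self_signed], browser_store))             # browser balks
print(chain_is_trusted([self_signed], walled_garden_store))       # fine in-house
```

The same self-signed certificate is rejected or accepted depending only on what the verifier has in its trust store, which is the browser-versus-m2m distinction in a nutshell.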

So let’s summarize. When opening a link between two entities in the network, each end sends a public key to the other end, including a signed certificate that proves the legitimacy of the key. I know, that was a lot of words to get to that point, but… that seems to be a common thread with security: nothing is ever simple or straightforward. There’s always an angle that you didn’t think of. (Which is why crackers remain in business…)

One last note on certificates: they expire, and they can be revoked if compromised. Revocation can happen only once it’s discovered that someone cracked a private key (and not necessarily before harm occurs). The associated certificate is then posted on a list of revocations that browsers and other systems can check. It’s like cashing that cashier’s check, which, by definition, is supposed to be unquestionably legit – but your bank still calls the originating bank to make sure the check isn’t fraudulent.
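The revocation check itself is conceptually a simple lookup. In this sketch the serial numbers, device names, and the `is_acceptable` helper are made up, and the revocation list is just an in-memory set rather than anything fetched from a real authority.

```python
# Hypothetical certificates, reduced to the one field that matters here.
certificates = {
    "sensor-17": {"serial": 1001},
    "sensor-18": {"serial": 1002},
}

revoked_serials = set()   # the published revocation list, empty at first

def is_acceptable(cert, revocation_list) -> bool:
    """A listed certificate is rejected even if its signature still verifies."""
    return cert["serial"] not in revocation_list

before = is_acceptable(certificates["sensor-18"], revoked_serials)

# Someone discovers that sensor-18's private key was cracked and reports it.
revoked_serials.add(certificates["sensor-18"]["serial"])

after = is_acceptable(certificates["sensor-18"], revoked_serials)

print(before, after)   # accepted before the report, rejected after it
```

Anything the attacker did between the crack and the report went through unchallenged, which is the "not necessarily before harm occurs" caveat.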

Expiration is useful first because it means that there’s a definite date after which revocations can be taken off the list (or else they’d have to stay up forever). The other use of expirations is that of security technology aging. All encryption technology is eventually replaced by newer, stronger techniques: perhaps longer keys or different key-generation algorithms. Having the certificate expire forces the owner to review current standards when renewing. It might be time to upgrade servers or at least generate a new, better key.

CA-originated certificates tend to last longer (and you pay for that). Certificates from entities farther down the trust chain tend to expire sooner. We’re talking on the order of 1-5 years here. You can make your own certificate expire whenever you want, but if you have it expire after the root certificate (or any other certificate in the chain) does, it won’t matter, because when one element in the chain expires, then the chain is no longer valid.
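The "weakest link" effect of expiration dates can be shown in a few lines. The dates and names below are invented for the example; the only claim is that a chain is good until its earliest expiration.

```python
from datetime import date

# Hypothetical chain: your own certificate outlives the intermediate's.
chain = [
    {"subject": "sensor-17", "not_after": date(2030, 1, 1)},
    {"subject": "Example Intermediate", "not_after": date(2027, 6, 30)},
    {"subject": "Example Root CA", "not_after": date(2035, 12, 31)},
]

def chain_valid_until(chain) -> date:
    """The chain dies when its first element expires."""
    return min(cert["not_after"] for cert in chain)

def chain_is_current(chain, today: date) -> bool:
    return today <= chain_valid_until(chain)

print(chain_valid_until(chain))                    # the intermediate's date wins
print(chain_is_current(chain, date(2028, 1, 1)))   # sensor-17 itself is still in date
```

Setting `sensor-17` to expire in 2030 buys nothing here, because the intermediate pulls the whole chain down in mid-2027.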

And with those artifacts – keys, signatures, and certificates – in place, we can proceed to a discussion of authentication.

[Editor’s note: the next article in the series, on authentication, can be found here.]

Do you use security artifacts other than keys/signatures/certificates? Or variations on that theme?

Some interesting follow-up discussion going on here: https://www.linkedin.com/grp/post/6931120-6042732168600444932

In particular, the browser consideration for using CA certificates may not apply…