[Editor’s note: this is the second in a series on Internet-of-Things security. You can find the introductory piece here.]

So you’re buying a car. I don’t know how it works in other countries, but in the US, it’s one of the least favorite purchases someone can make. Partly that’s because it’s the only purchase we haggle over, and, as buyers, we’re not used to haggling, so we’re not skilled at it. That aside, all too often, when the deal is done, buyers come away feeling like there’s a good chance they’ve been owned in the process.

Let’s start with the sales guy who slithers out of the office when you walk onto the premises. You’re probably being approached by half a dozen sales folks, like so many hungry zombies, each wanting to reach you first but not wanting to look over-eager or to turn it into an outright race. One guy wins and you’re stuck with him. Granted, he (or she; we’ll assume he for our purposes) may seem to be a very nice person, and he’ll work hard to earn your trust.

But when it comes to doing the deal, you’ve got to put a number out there that’s less than the asking price. Your sales guy’s reaction will be the first indication of how your proposal is received. Inevitably, your opening move will be problematic for them, for all kinds of reasons. Is he giving you the straight scoop? Can you believe what he’s saying?

Perhaps you work a provisional deal with him and he goes in to get manager approval. Is he really haggling on your behalf with the manager? Or is he going to watch a few minutes of The Price Is Right while you fidget nervously in the cubicle?

Perhaps he comes back with a counter-offer from the manager. Did the manager really write the counter-offer? There’s a signature, but is that really the manager’s signature? You’ve never seen her signature before, so it could be anyone signing the name. Heck, you don’t really know the manager’s name.

Perhaps it comes with an invoice showing the cost to the dealer. It’s on manufacturer’s letterhead. But is the number really right? Or do they have a stack of letterhead to print any old thing on? Even if it’s right, does it really represent cost, or does the manufacturer have an inflated cost for this purpose, with a debit-back program after the car is sold?

In short, do you trust the sales guy? Do you trust the manager? Do you trust the manufacturer? Do you trust any of the artifacts they present as authentic and accurate? The reason so many people come away feeling had is that a crucial ingredient is missing from the transaction: trust.

When it comes to electronic device security, at some point, you’ve got to have some trust, or else you might as well pack it up and go home. And if you trust a piece of hardware or software, it will be for one of two reasons: either you have direct proof that the thing is trustworthy, or you have indirect attestation and you trust the entity providing the attestation.

In the former case, you can think of this as a Root of Trust. It’s a fundamental kernel that you never question (or it’s the very last thing you question). In the latter case, you have a chain of trust. Entity A endorses Entity B endorses Entity C endorses whatever you’re questioning. You can trace the provenance back to a Root of Trust, and then you’re satisfied.
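To make the chain idea concrete, here’s a minimal Python sketch of tracing provenance back to a root. It models only the structure of the endorsements, and the entity names are hypothetical. A real system would verify a cryptographic signature at every link (as in an X.509 certificate path) before following it; this sketch deliberately leaves that out.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Entity:
    """A party in a hypothetical chain of endorsements."""
    name: str
    endorsed_by: Optional[Entity] = None  # who vouches for this entity, if anyone


def traces_to_root(entity: Entity, trusted_roots: set[str], max_depth: int = 16) -> bool:
    """Follow endorsements upward; succeed only on reaching a trusted root.

    NOTE: this checks structure only. A real chain of trust would verify a
    digital signature at each link before following it.
    """
    current: Optional[Entity] = entity
    for _ in range(max_depth):
        if current is None:
            return False  # ran out of endorsers without meeting a root
        if current.name in trusted_roots:
            return True
        current = current.endorsed_by
    return False  # refuse overly long chains


entity_a = Entity("Entity A")
entity_b = Entity("Entity B", endorsed_by=entity_a)
entity_c = Entity("Entity C", endorsed_by=entity_b)
widget = Entity("thing in question", endorsed_by=entity_c)

print(traces_to_root(widget, {"Entity A"}))      # True
print(traces_to_root(widget, {"Someone Else"}))  # False
```

The second call fails not because anything in the chain is “bad,” but because the chain never reaches anything the checker already trusts.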

While rummaging around for information on trusted entities, I noticed that there are really two notions of trust, and standards or environments are evolving for both. One type of trust is used for engaging with others: it forms the basis of authentication (which we’ll cover separately). It’s a machine-to-machine trust thing.

The other is trust within a machine: how do I know that a complex set of software capabilities is rock-solid and not being compromised by some ne’er-do-well? We’ll look at them separately.

I Yam What I Yam

So you’ve got this Internet of Things (IoT) edge node that needs to join the network. Why should the network let you on? Or you’ve got some accessory that plugs into your IoT edge node. Why should your edge device accept the accessory when it’s plugged in?

Yes, authentication is a process for executing the Secret Handshake and whispering the Magic Password, but how do you know that the guy whose hand you’re shaking is the real deal and not someone who tortured the real deal to get the handshake and password and is now masquerading as the real deal?

There are a variety of artifacts – keys, certificates, whatever – that are passed back and forth when authenticating. Are they real? Have they been spoofed? How can you trust that they’re legitimate? Well, you can trust them if you trust the environment that produced them.

And this is where we introduce one fundamental trust notion: hardware is trusted more than software. In general, software can be monkeyed with in real time. Hardware – not so much. And a piece of hardware that has been thoroughly and publicly vetted enjoys the highest level of trust.

One well-known example is the Trusted Platform Module (TPM), created by the Trusted Computing Group. It’s a somewhat opaque (perhaps translucent is better) special-purpose microcontroller used for managing artifacts that need to be trusted. It can be used to generate keys, for one – the cryptographic functions are handled internally in hardware.

But certifying that a system is trustworthy involves more than just handing keys back and forth. You really want to be sure that nothing has compromised the system. When you trust the system, you’re trusting, among other things, that critical boot files and other fundamental resources haven’t been tampered with.

So the TPM contains a number of registers used for storing information about the system. One contributor to those registers is Linux’s Integrity Measurement Architecture (IMA), which lets you specify a list of resources to be validated. At boot-up (or at other system-critical times), the files on that list are measured (hashed), the measurements are folded into a TPM register, and the result can be checked against the value expected for a known-clean system.
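Here’s a rough Python sketch of the measure-and-compare idea, assuming a hypothetical list of two files and golden SHA-256 values recorded from a clean system. It’s a simplification, not how IMA itself works: real IMA runs inside the Linux kernel, decides what to measure from a policy, and extends its measurements into a TPM register rather than comparing them on the spot.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def measure(path: Path) -> str:
    """Return the SHA-256 digest of a file's contents (its 'measurement')."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def check_against_golden(golden: dict[str, str], root: Path) -> list[str]:
    """Return the listed files that are missing or whose measurement changed."""
    mismatches = []
    for rel_name, expected in golden.items():
        target = root / rel_name
        if not target.is_file() or measure(target) != expected:
            mismatches.append(rel_name)
    return mismatches


# Simulate a device filesystem in a temporary directory (file names are hypothetical).
with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    (root / "bootloader.bin").write_bytes(b"\x7fELF...clean")
    (root / "init.cfg").write_text("start=secure\n")

    # Golden values recorded while the system is known to be clean.
    golden = {name: measure(root / name) for name in ("bootloader.bin", "init.cfg")}
    clean_result = check_against_golden(golden, root)

    (root / "init.cfg").write_text("start=anything\n")  # simulated tampering
    tampered_result = check_against_golden(golden, root)

print(clean_result)     # []
print(tampered_result)  # ['init.cfg']
```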

Of course, that means you need to be able to take a known-clean system and load up the TPM. System configuration information can be loaded into the Platform Configuration Registers (PCRs), but it’s not simply a matter of writing data into the registers. Data is hashed before storage, and a PCR can’t be overwritten at will; new data is added by “extending” the PCR: taking the current value, appending the hash of the new information, and hashing the result.
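The extend operation is easy to model. This Python sketch assumes a SHA-256 PCR bank, where the new value is SHA-256(old value || SHA-256(data)), starting from the all-zeros value most PCRs hold after a reset; the boot-event strings are made up. In a real system the PCR lives inside the TPM, and host software can only ask the TPM to extend it (for example via the TPM 2.0 `TPM2_PCR_Extend` command). What the sketch shows is that the final value depends on every event and on their order, so software can’t simply write in a clean-looking value after the fact.

```python
from __future__ import annotations

import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 PCR bank


def extend(pcr: bytes, data: bytes) -> bytes:
    """Model a SHA-256 PCR extend: new = SHA256(old || SHA256(data))."""
    measurement = hashlib.sha256(data).digest()
    return hashlib.sha256(pcr + measurement).digest()


def replay(events: list[bytes]) -> bytes:
    """Start from the reset value (all zeros) and extend with each event in order."""
    pcr = bytes(PCR_SIZE)
    for event in events:
        pcr = extend(pcr, event)
    return pcr


# Expected value recorded from a known-clean boot (event names are hypothetical).
clean_boot = [b"bootloader v1.2", b"kernel v4.1", b"init config"]
expected = replay(clean_boot)

# Same events in a different order, or one altered event, give a different value.
reordered = [b"kernel v4.1", b"bootloader v1.2", b"init config"]
tampered = [b"bootloader v1.2", b"kernel v4.1-evil", b"init config"]

print(replay(clean_boot) == expected)  # True
print(replay(reordered) == expected)   # False
print(replay(tampered) == expected)    # False
```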

Because the TPM is well known and well attested, it can form a root of trust for authentication. “How do I know your key is legit?” “Because I got it from the TPM.” “Ah, ok, cool. Come on in…”
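Here’s a hedged sketch of that exchange in Python: the verifier sends a fresh random nonce, and the device answers with a “quote” binding its PCR value to that nonce. One big assumption: a shared HMAC key stands in for the TPM’s attestation key. A real TPM signs quotes with an asymmetric key whose private half never leaves the chip, so the verifier needs only the public half.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets

# ASSUMPTION: a shared HMAC key stands in for the TPM's attestation key.
# A real TPM signs quotes with an asymmetric key that never leaves the chip.
ATTESTATION_KEY = secrets.token_bytes(32)


def device_quote(pcr_value: bytes, nonce: bytes, key: bytes = ATTESTATION_KEY) -> bytes:
    """Device side: bind the current PCR value to the verifier's fresh nonce."""
    return hmac.new(key, pcr_value + nonce, hashlib.sha256).digest()


def verifier_accepts(quote: bytes, expected_pcr: bytes, nonce: bytes,
                     key: bytes = ATTESTATION_KEY) -> bool:
    """Network side: recompute what an honest, unmodified device would send."""
    good = hmac.new(key, expected_pcr + nonce, hashlib.sha256).digest()
    return hmac.compare_digest(quote, good)


# Stand-in PCR values for a clean and a modified configuration.
expected_pcr = hashlib.sha256(b"known-clean configuration").digest()
tampered_pcr = hashlib.sha256(b"modified configuration").digest()

nonce = secrets.token_bytes(16)  # fresh per challenge, so old quotes can't be replayed
honest = device_quote(expected_pcr, nonce)

print(verifier_accepts(honest, expected_pcr, nonce))                             # True
print(verifier_accepts(device_quote(tampered_pcr, nonce), expected_pcr, nonce))  # False

# Replaying yesterday's quote against a new challenge fails.
replayed = verifier_accepts(honest, expected_pcr, secrets.token_bytes(16))
print(replayed)  # False
```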

Maintaining a Clean House

The second notion of trust doesn’t involve interaction with another system, but aims to ensure that one’s own system isn’t being hijacked. The IMA is actually a piece of that, ensuring that mission-critical parts of the environment haven’t been compromised, but that’s just the start.

You can put in all the safeguards you want, but here’s the deal: modern systems are complex. And getting more complex. And complexity expands the attack surface, providing more things to protect in the system and more opportunities for attackers because, hey, there’s no way you can think of everything.

This argues in favor of simple, plain-vanilla systems. Particularly ones with true, non-reprogrammable ROM boot code. Because even though boot code is software, ROM is hardware, and you can’t monkey with ROMed boot code.

If you have a simple image locked in ROM that you bring in and run over bare metal or a simple RTOS, then there’s less opportunity to break in and cause mayhem. And if a problem does materialize, then a reboot should provide a fresh restart.

The problem is, this security comes at an expense: the system you ship will remain invariant – you can’t update it because all the software is in ROM. (If you have some way to extend by updates in flash, you’ve now violated the all-ROM assumption and provided a way for someone to monkey with the code.) Most systems nowadays need updating. In fact, agile methodologies and the race to market mean that IoT devices are being shipped with a starting set of features that are likely to be upgraded over time. So the ROM/single image model has limited applicability.

Devices offering more complex graphics and other capabilities are more likely to run a “rich” operating system (OS) like Linux. And this brings you back to complexity. And you now have the notion of a “process” – the OS doesn’t intrinsically restrict what can run; in theory, any process can be created. That means that, in addition to the risk of existing processes being contaminated, outright new processes can be launched – ones that weren’t shipped with the system.

In particular, such processes (or modifications to other processes) could play mischief with critical system resources – communications, storage, etc. In theory, a system could offer up a public sandbox under the assumption that only invited guests will play, or perhaps that it’s a nice neighborhood, so everyone is welcome, and we’re sure that they’ll all play nicely together.

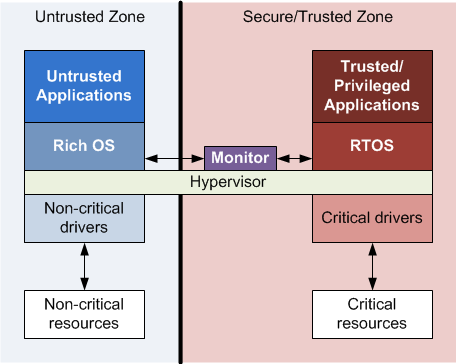

Which we know isn’t true. So this is where internal trust comes in, and I’ve run across two levels of security architecture to handle this. At the lowest level is the Trusted Execution Environment (TEE) from GlobalPlatform. Riding more broadly above this are technologies like ARM’s TrustZone or Imagination Technologies’ OmniShield. The latter systems encompass TEE concepts but also provide protection for bus transactions and other system elements.

The TEE has the notion of a simple, certified RTOS kernel that controls the family jewels. A small number of validated, trusted applications can run over this kernel. Everything else runs on a “rich” OS like Linux. The rich-OS programs cannot access critical resources directly; they have to pass those requests off to the TEE for execution.

You then end up with (at least) two levels of privilege: trusted (referred to as “Secure World” with TrustZone) and untrusted (referred to as “Normal World” with TrustZone). Normal, untrusted programs cannot access protected resources directly; they have to go through a trusted monitor that can enforce policies to ensure that only legitimate resource requests are honored.
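The division of labor can be sketched as a toy Python model: Normal World code can only submit requests, and a monitor honors those that an allowlist policy explicitly permits. The app and resource names are hypothetical, and the model covers only the policy check. On real TrustZone parts, the transition into Secure World goes through the SMC instruction and secure monitor code, and GlobalPlatform specifies a TEE Client API for the Normal World side; none of that is reproduced here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    caller: str     # Normal World process making the request
    resource: str   # protected resource it wants, e.g. a key slot
    operation: str  # what it wants to do with that resource


class TrustedMonitor:
    """Toy gatekeeper between Normal World and Secure World.

    Normal World code never touches protected resources directly; it can
    only submit a Request, which is honored only if the policy allows it.
    """

    def __init__(self, policy: set[tuple[str, str, str]]):
        self._policy = frozenset(policy)  # default-deny allowlist
        self.audit_log: list[tuple[Request, bool]] = []

    def submit(self, req: Request) -> str:
        allowed = (req.caller, req.resource, req.operation) in self._policy
        self.audit_log.append((req, allowed))
        if not allowed:
            raise PermissionError(f"{req.caller} may not {req.operation} {req.resource}")
        return self._run_in_secure_world(req)

    def _run_in_secure_world(self, req: Request) -> str:
        # Stand-in for a trusted application doing the work: the caller
        # gets a result, never the underlying secret.
        return f"{req.operation} on {req.resource} completed"


# Hypothetical policy: only the wallet app may use the payment key, and only to sign.
monitor = TrustedMonitor({("wallet_app", "payment_key", "sign")})
print(monitor.submit(Request("wallet_app", "payment_key", "sign")))
# sign on payment_key completed

try:
    monitor.submit(Request("game_app", "payment_key", "export"))
except PermissionError as err:
    print("denied:", err)  # denied: game_app may not export payment_key
```

A default-deny allowlist is one plausible design choice here: anything the policy author didn’t anticipate is refused rather than allowed.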

Critical for this, then, especially with multicore platforms, is virtualization. You may have one core assigned to the RTOS, with Linux apps virtualized across the other cores. Or you may have both of the OSes virtualized across all of the cores. The monitoring function may be performed by the hypervisor. And virtualization is another place where hardware is preferred to software.

Some processors come with hardware virtualization support. Without it, context switches involve such chores as flushing the cache, which hurts performance. Software is also less trusted than hardware, so hardware structures that can store context information, allowing quick context switches, will give better performance and let the designer sleep better.

Hardware-assisted virtualization has been around for a while. Intel and AMD processors have it, as do ARM, MIPS, and Power Architecture (remember them?) processors. So this isn’t a rare feature – but it is an open question if you’re putting a minimal bit of hardware onto, say, a door lock. TrustZone, for example, is a Cortex-A thing. If you’re using Cortex-M, you may need to fall back to a simpler approach. Then again, if you’re using Cortex-M, you’ve probably got a less rich and complex system – a good thing from a security standpoint.

How Do We Know You’re to Be Trusted?

So you’ve got this root of trust and perhaps some trusted applications and a trusted hypervisor. How do you know that you trust them? Or perhaps you do trust them; how, then, do you get your users to trust them? That’s where certification comes in.

And this brings us to yet another framework: the Common Criteria for Information Technology Security Evaluation (referred to as Common Criteria, or CC). It sets up a process by which you can have your system evaluated.

At its simplest, this is a process of specifying a set of claims about your system (in CC terms, a Security Target). In other words, this isn’t strictly about testing your system against a fixed standard that everyone follows; you make claims as to what your system can do, and then it’s evaluated against those claims.

There is also an Evaluation Assurance Level (EAL), from 1 to 7, that specifies how exhaustively your system will be evaluated. This has nothing to do with security levels – a device evaluated at EAL 7 isn’t necessarily more secure than one evaluated at EAL 1, but, because it has had more tests thrown at it, you can have greater confidence in the EAL 7 results.

Under CC, labs are accredited (through national certification schemes) to do evaluations. This is a chain of trust at its most basic: CC forms the root, with testing houses trusted by virtue of that accreditation, and tested devices trusted by virtue of the house that tested them.

On some systems, like PCs, it’s possible for users to add trusted programs – you get certificates and then go through a process that tells Windows to trust that program. This is sort of a reverse trust thing. In most cases we’re talking about the machine trying to convince you that it’s trustworthy. In the Windows example, it’s you telling the machine that a program is trustworthy.

We’ve taken naught but a high-level look at these concepts. We won’t go into the boot-sequence details, for example, needed to establish a chain of trust as the system comes up. But there are lots of details that go into establishing which parts of the system can be trusted and which can’t. It’s not that the untrusted parts are inherently bad or malicious; it’s just that it hasn’t been worth the effort to certify them, and they can manage alongside a TEE.

The untrusted software is also likely to be updated more often and more blithely; the trusted components would not be changed out nearly as easily without being triple and quadruple sure that the new version is a trustworthy replacement for the old trusted one and not a Trojan horse masquerading as an update in order to attain trusted status.
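One way to picture those extra checks is the Python sketch below, built on stated assumptions: the vendor publishes a manifest giving the new version number and the image’s SHA-256 hash, and the device accepts the update only if the manifest is authentic, the image matches it, and the version is newer than what’s installed (to block rollback to an older, vulnerable release). An HMAC key stands in for a real code-signing key; a device would normally verify an asymmetric signature against a public key kept in ROM or other trusted storage.

```python
from __future__ import annotations

import hashlib
import hmac
import json

# ASSUMPTION: an HMAC key stands in for the vendor's code-signing key.
# A real device would verify an asymmetric signature with a trusted public key.
VENDOR_KEY = b"hypothetical-vendor-key"


def sign_manifest(version: int, image: bytes, key: bytes = VENDOR_KEY) -> tuple[bytes, bytes]:
    """Vendor side: describe the image and authenticate that description."""
    manifest = json.dumps({"version": version,
                           "sha256": hashlib.sha256(image).hexdigest()}).encode()
    return manifest, hmac.new(key, manifest, hashlib.sha256).digest()


def accept_update(image: bytes, manifest: bytes, tag: bytes,
                  installed_version: int, key: bytes = VENDOR_KEY) -> bool:
    """Device side: every check must pass before a trusted component is replaced."""
    expected_tag = hmac.new(key, manifest, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected_tag):
        return False  # manifest did not come from the vendor
    fields = json.loads(manifest)
    if hashlib.sha256(image).hexdigest() != fields["sha256"]:
        return False  # image differs from what the vendor described
    if fields["version"] <= installed_version:
        return False  # rollback (or replay) of an older release
    return True


image_v2 = b"trusted component v2"
manifest, tag = sign_manifest(2, image_v2)

print(accept_update(image_v2, manifest, tag, installed_version=1))         # True
print(accept_update(b"trojan horse", manifest, tag, installed_version=1))  # False
print(accept_update(image_v2, manifest, tag, installed_version=2))         # False
```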

Once you have trust, you have the world. You can much more easily protect your own system and you can demonstrate to other systems that you can be trusted. We’re likely to see much more of this as IoT security solutions mature, but implementing trust mechanisms in extremely resource-constrained devices is something I haven’t seen yet.

That said, in most discussions of the TEE, for example, the domain of trust is a system-on-chip (SoC). If ultra-low-cost applications have their own SoCs selling at high volumes, then the economics of trust may ease up.

Finally, the day we see zones of trust implemented in car dealerships will be the day that trusted interactions will have finally come into their own.

[Editor’s note: the next article in the series, on security artifacts, can be found here.]

More info:

Imagination Technologies’ OmniShield

How does your security setup establish trust, especially if it’s a small device?

And the TEE needs to be completely transparent: from the tool chain that compiles it, to the source code for everything (tool chain, libraries, and product), to the verifiable binaries that are produced.

Trust starts with the transparency that lets anyone, especially the end customers, audit the entire build process and resulting system (or commission a third-party audit) and verify that the build matches what is in the end IoT device.

This doesn’t require that everything be open source in the traditional sense, but it does require, at minimum, that the source licenses for the device and tool chains be included with the product AND with ALL later updates.

This doesn’t mean there can’t be a backdoor trojan carefully hidden in the system … it does mean that people are freely encouraged to look for them, and that when they are found, they can be safely removed without abandoning the product.

Security by obscurity isn’t security at all.

http://cd.textfiles.com/hackchron/CUD1/CUD107D.TXT

An important aspect of the picture you draw here is that it is multi-party: chip vendor, processor IP provider, OS/RTOS provider, hypervisor provider, stack source (open?), and applications.

I won’t comment on whether Benjamin Franklin’s quote about secrets and the number of people needed to keep one applies to Trust, but that is something to consider nowadays.

Regarding auditing by end customers, that’s going to depend mightily on who that end customer is. If we’re talking home automation, there’s no way your average customer is going to do that (they wouldn’t know how, nor could they interpret artifacts). So they’re going to have to trust others to do that for them.

So there’s another link in the trust chain, perhaps? System comes out, technical reviewers go at it, doing whatever level of audit they do, and then give thumbs up or down. If those reviewers are trusted by end users (rightly or wrongly), they become part of the chain of trust – and end users make decisions based on those reviews.

@bmoyer: that is certainly one way to get it done in the private sector; another is to have Homeland Security tasked with making security audits. The difficult part is when devices have a remote update feature, as the trojan may not be in the device until long after it has passed security reviews.

The device could be updated with a trojan only on demand, say after a rogue attacker flies a drone over the target’s building and triggers an update of all those IoT devices with trojans … security reviews would never find it.

Home automation clearly has different and relatively lower/insignificant overall direct risks. Except maybe where governments, rogue businesses, and organized crime are specifically targeting high value individuals or their employers.

Factory automation, large businesses, devices used in high-security environments, and any application that could cause significant financial disruption: well, that’s a VERY different and significant risk.

The Sony hack tells us a lot about what can be done with just computer-accessible data.

Taking that one step up, relatively simple devices like remotely controlled light switches that contain covert audio/video surveillance could be extremely useful for a number of different rogue parties … especially if they are deployed widely in the homes of government officials and of employees of significant businesses. Combine that with the personnel data China just stole, and it might become the last link necessary to effect blackmail or locate an unstable employee in the right position for theft of secrets. Install them everywhere, for a wide surveillance network.

The little integrated chip of the remotely controlled light switch could actually contain enough memory to record a year or more of conversation in the room, accessible on demand when an individual becomes targeted. None of this easily detectable RF wireless mic stuff … just drive by or send a drone over the business or house every few months to collect the data when wanted. Or build in a voice-to-ASCII processor with a targeted search algorithm, and have it reach out when certain trigger phrases are found.

A billion of these little $0.25 subsidized “bargain” trojan light switches could be a VERY effective data collection network, especially if they contained a Wi-Fi or Bluetooth sniffer too.

In the end, the real question is: does the enhanced function/utility justify (or require) taking the risk that the device becomes a trojan?