[Editor’s note: this is the fourth in a series on Internet-of-Things security. You can find the introductory piece here and the prior piece here.]

You’re in a hotel, dead tired from a long day of meetings. There’s a knock on the door; you ask who it is, and they respond, “Room service.” Perfect; you had no energy to go out, and so you ordered up to dine in the relaxed ease of your room. You let the server in; your dinner is set up; you give a nice tip; she leaves so that you can tuck into some comfort food. You lift the warmer, and…

You’re in a hotel, trying to catch up on sleep with an early-to-bed regime after changing too many time zones. As you’re drifting off, there’s a knock on the door. “Who is it?” “Housekeeping.” Housekeeping? At 9 PM? You peer through the peephole; looks like someone from housekeeping. You open the door a slice, chain on, and ask for ID; ID is proffered up and looks legit (although you have no idea what it’s supposed to look like, so it could be fake). “Turn-down service. We’re running a bit late today; sorry.” Reluctantly, cautiously, you open the door, and…

You’re in a hotel, getting some work done on a day with no meetings. On the 23rd floor, you have a nice view over the city, the river, and surrounding parks. You have the window open for some fresh air; security isn’t exactly an issue this high up. A window washer comes gliding down on his platform, and, as you wonder whether you could ever do that job, the cleaner steps through the window, and…

You’re in a hotel; you’re there for a week. The third day in, you get a knock on the door: “Maintenance. Someone got the master key, and we have to redo all the locks. It’ll just take a minute; sorry for the inconvenience.” You let him in to do his work; he hands you a new key, and you continue on with your work. Late that night, as you’re sleeping, you wake to the sound of a key in your door. “Another drunken neighbor who can’t find the right room,” you think. Then the door opens, and…

What we have here is a failure to authenticate.

And it’s not always the fault of an overly trusting hotel guest. Yes, in some cases, there are simple things that could have provided better protection, like keeping the window closed. (But come on, on the 23rd floor? How paranoid can you be?) In many cases, however, the guest really has no means to authenticate. How is he or she supposed to tell a good ID from a bad one, for instance? In the movies, you can listen for the music to tell you whether or not to be worried; real life has no such helpful soundtrack.

Swap “network” for “hotel room” in the scenarios above, and we have four variants of someone trying to gain access to the network:

- Someone familiar and expected asks for access to the network. You’re likely to be trusting in this case.

- Someone unfamiliar and/or unexpected asks for access to the network. Now you’re not so sure whether trust is a good idea.

- You’ve protected the main network connection as the obvious way someone is going to come onto the network. You didn’t think about the little debug port off to the side that’s wide open.

- Your device finds that there’s a firmware update waiting. You download and install it.

Each of the hotel scenarios comes with risk. The “and…” at the end of each one suggests that things might have gone just fine, or results might have been disastrous. Not every knock on the door brings danger; in fact, most don’t. Problem is, in too many cases, it takes only one nightmare event to ruin your day. Or worse.

You may also think, “Hey, I have nothing of value in my hotel room. OK, so they come poke around; they’re not going to find anything useful.” Might be true, but maybe they don’t care about what’s in your room. Maybe what they’re interested in is that computer you have hooked up, connecting you to your company headquarters – with all its rich data – over a nice, secure VPN. By getting into your unimportant hotel room, they got into the important company mainframe.

Which is why Internet of Things (IoT) edge nodes are so vulnerable. On their own, they don’t seem to offer much in the way of value to some ne’er-do-well. But if your device is the miscreant’s way onto the network, well, there’s lots of value there.

When to Authenticate

In our previous article, we established three fundamental tools for authentication: keys, signatures, and certificates. How and when do we apply these? The simplest answer is, anytime anyone is asking for access to the network.

- That request may come over the internet connection. It happens to share the same physical port (Ethernet, WiFi, whatever) that gets you onto the private network as well.

- It could come through some other physical external port. Examples: a debug port or a USB port.

- It could come through a so-called “consumable.” The poster-child example of this is a printer ink cartridge. But not all consumables apply. For instance, those little coffee pods in a connected coffee maker wouldn’t necessarily need authentication. The difference is consumable connectivity. In the printer case, the printer needs to talk electronically to the cartridge – that’s how it prints. So there’s an electronic connection that could be compromised. With the coffee pod, there’s no electronic connection, so there’s no way the pod could get onto the network. The only reason for authenticating a coffee pod would be to enforce brand exclusivity, but monopolistic world domination [cue eerie demonic laughter] is not our topic for today, so we’ll let that slide. (That said, there are concerns that concepts like trusted computing might end up being used more for digital rights (over-) enforcement than for actual security, so it’s not an irrelevant consideration.)

Effective authentication means confirming three things: (1) that the access requestor is who it says it is, (2) that the requestor is authorized to join the network, and (3) that the requestor hasn’t been manipulated somehow.

An example would be at an ATM. The card is intended to indicate authority to get money (number 2); the PIN is intended to convey that you are the legitimate owner of the card (number 1) under the (hopefully correct) assumption that (a) only the rightful owner knows the PIN and (b) it’s incredibly unlikely that someone could randomly guess the PIN in a few tries. A final step – typically not done – would be for a camera to analyze the situation to see if the person at the ATM appears to be under the control of someone with a gun, forcing them to take out money. In that case, the requesting person has lost the required integrity to proceed (number 3).
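To put a rough number on assumption (b), here is a small sketch. The 4-digit PIN and the 3-try lockout are my own illustrative assumptions, not figures from any particular bank, and `guess_probability` is a hypothetical helper name.

```python
from fractions import Fraction


def guess_probability(pin_digits: int, tries: int) -> Fraction:
    """Chance that an attacker trying distinct random PINs hits the right one."""
    total_pins = 10 ** pin_digits
    # Each distinct wrong guess rules out one candidate, so the overall
    # chance of success is simply tries / total_pins (capped at certainty).
    return Fraction(min(tries, total_pins), total_pins)


# Assumed numbers: a 4-digit PIN and a lockout after 3 tries.
print(float(guess_probability(4, 3)))  # 0.0003
```

That’s three chances in ten thousand, which is why the lockout matters as much as the PIN length: without a limit on tries, the same PIN falls to simple enumeration.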

All of this gets wrapped up in a pas de deux that we call authentication – although it bears noting that there’s a fair bit of potentially confusing terminology here, particularly with words starting with “a”:

- Authentication is the process of deciding whether someone is who they say they are.

- Authorization is the process of granting privileges to some authenticated entity.

- Attestation has to do with proving that data and software haven’t been monkeyed with.

Authentication can be one-way: only the network authenticates a new node. Or it can be two-way: the new node also authenticates the authenticating server on the network.
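The difference between one-way and two-way can be sketched with a simple challenge-response exchange. Note the assumptions: this uses a pre-shared secret and an HMAC rather than the keys, signatures, and certificates discussed in the previous article, purely to keep the example short, and the function names and role labels are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Tuple


def prove(key: bytes, role: bytes, challenge: bytes) -> bytes:
    """Answer a challenge by MACing it together with the responder's role."""
    # Binding the role into the MAC keeps a reply from being reflected
    # back as an answer to the opposite direction's challenge.
    return hmac.new(key, role + b"|" + challenge, hashlib.sha256).digest()


def check(key: bytes, role: bytes, challenge: bytes, response: bytes) -> bool:
    """Recompute the expected reply and compare in constant time."""
    return hmac.compare_digest(prove(key, role, challenge), response)


def mutual_authenticate(node_key: bytes, server_key: bytes) -> Tuple[bool, bool]:
    """Return (server accepts node, node accepts server)."""
    # One-way: the network challenges the new node.
    server_nonce = secrets.token_bytes(16)
    node_reply = prove(node_key, b"node", server_nonce)
    node_ok = check(server_key, b"node", server_nonce, node_reply)

    # Two-way: the node also challenges the authenticating server.
    node_nonce = secrets.token_bytes(16)
    server_reply = prove(server_key, b"server", node_nonce)
    server_ok = check(node_key, b"server", node_nonce, server_reply)
    return node_ok, server_ok
```

If both sides hold the same secret, both checks pass; an impostor on either side fails the check made by the other. A one-way scheme would simply stop after the first half.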

One approach to authentication

While client/server authentication via protocols like RADIUS has been around for a long time, letting you log onto the Internet from your computer, the question is how we can effectively do this on IoT edge nodes with fewer resources. That doesn’t necessarily mean replacing RADIUS, but rather extending its notions.

There’s no one right way to do it, but, just as the Trusted Platform Module we discussed in an earlier piece established one go-to model, so the same group has established a Trusted Network Connect (TNC) process for complete authentication.

There’s a lot of documentation covering this particular authentication scheme, and each page is awash in acronyms. There is also a fair bit of nuance that goes beyond what we have space and time for here. So I figured I’d abstract the essence of what’s going on; you can then build your own personal relationship with the spec if you want to dig deeper.

The overall authentication scheme involves three parts:

- User authentication: this involves a human and a password – and possibly multiple other factors like challenge questions or taking the user to a page where they can see a personalized image so they know they’re on the right site. We’ve all done this. Too many times. This is the only step that involves the user directly.

- Platform authentication: keys get exchanged and certificates get checked.

- Integrity verification: lots of little checks occur to make sure that the requestor isn’t in fact a body snatcher.

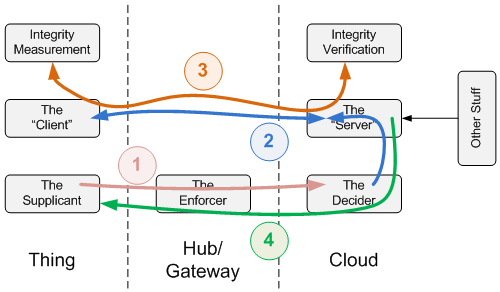

Each of the pieces of the diagram is separate, even if it seems like they do similar things. For instance, the decision-making element is different from the “enforcing” element. Put simplistically, when you ask to get in, one guy decides yes or no, and that guy tells the enforcer guy who does the actual letting in (or not). The Enforcer guy is at the door (perhaps we should call him the Bouncer), and he gets a call from The Decider, who sits upstairs, as to whether or not you’re getting in. Physically, the decision may be made in a server somewhere in the cloud; the enforcement may happen through a gateway firewall.

The decision is based on the outcome of the three steps above as well as other information (“metadata”) that may be elsewhere. For instance, at the door, you may pass all three steps – you got the secret handshake right, all your papers are in order, and nothing seems to be counterfeit – so you should be able to get in, except that they just saw those pictures you posted from last weekend, and they’re not so excited about giving access to people like you. Policy has a big role here.

One super-simplified way of looking at it is in the following figure. It shows four basic steps, with the first three being the three elements above and the fourth one being communication of the final decision.

Step 1 shows user authentication. If that passes, then step 2, platform authentication, proceeds, where the Thing and Client exchange bona fides – keys and certificates for inspection. If that works ok, then the server and client get into a conversation in step 3 about what the server wants to see in the way of integrity proof or attestation. Messages get passed back and forth until all the questions have been answered. Finally, based on the results of that, plus any other relevant policy stuff (like those Facebook pictures), the Decider makes a Decision and lets the Enforcer and Supplicant know (step 4).
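The split between the Decider and the Enforcer can be sketched in a few lines. This is a minimal illustration of the four steps as described here, not the TNC protocol itself; the class, field, and function names are all hypothetical, and each step’s outcome is reduced to a simple pass/fail flag.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user_ok: bool           # step 1: user authentication passed
    platform_ok: bool       # step 2: keys and certificates checked out
    integrity_ok: bool      # step 3: attestation questions were answered
    policy_ok: bool = True  # other metadata-driven policy


def decide(request: AccessRequest) -> str:
    """The Decider: stop at the first failed check and say which one."""
    checks = [
        ("user authentication", request.user_ok),
        ("platform authentication", request.platform_ok),
        ("integrity verification", request.integrity_ok),
        ("policy", request.policy_ok),
    ]
    for name, passed in checks:
        if not passed:
            return f"deny: {name} failed"
    return "allow"


def enforce(decision: str) -> bool:
    """The Enforcer: opens the door only on an explicit allow (step 4)."""
    return decision == "allow"


# All three steps pass, but policy objects (those Facebook pictures).
verdict = decide(AccessRequest(True, True, True, policy_ok=False))
print(verdict, enforce(verdict))  # deny: policy failed False
```

Keeping `decide` and `enforce` separate mirrors the point above: the decision can live on a server in the cloud while the enforcement happens at a gateway.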

At this year’s IoT DevCon, a presenter from Cisco showed a demonstration done jointly with Infineon where they implemented authentication not just between Raspberry Pi-based endpoints, but at all points along the network (which, in this case, meant a router), some one-way, some two-way. Most endpoints had hardware Trusted Platform Modules (TPMs); one had a software implementation (although their conclusion favored hardware).

They were able to track the details of the authentication to confirm that a legitimate node was able to join the network, and a fake endpoint was not. However, this was early work, partly to show that this stuff is more than ideas on paper, while at the same time soliciting input. So folks aren’t quite done choreographing this dance.

The focus of this process is, of course, to admit a new element onto the network. There’s one other aspect we have to consider for machines that are already authenticated and on the network: software updates. I’ve run across less specific information about how software updates can be authenticated, but the essence of it is that you want to be sure that the update is legitimate, rather than an attempt by someone to change how the device works – likely by weakening security.

One simple approach is to send the update in one message and then have a signed hash sent in a separate message. If the separate hash can be successfully opened with the public key, then the message has presumably come from the legitimate sender (since only that sender has the private key for signing data that can be opened with its corresponding public key). You can then hash the update to see if the hash result matches the signed hash. If it does, then it’s extremely likely that the update wasn’t corrupted (intentionally or otherwise) en route.
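Here is a sketch of that hash-then-sign check. Big caveat: to stay self-contained it uses textbook RSA with a toy key built from two well-known Mersenne primes and no padding, which is not secure; a real device would use a vetted crypto library and a proper signature scheme. The key values and function names are my own assumptions for illustration.

```python
import hashlib

# TOY KEY, illustration only: two Mersenne primes, textbook RSA, no padding.
P = 2**521 - 1
Q = 2**607 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent; stays with the sender


def digest(update: bytes) -> int:
    """SHA-256 hash of the update, as an integer."""
    return int.from_bytes(hashlib.sha256(update).digest(), "big")


def sign_update(update: bytes) -> int:
    """Sender: hash the update, then sign the hash with the private key."""
    return pow(digest(update), D, N)


def verify_update(update: bytes, signature: int) -> bool:
    """Device: open the signed hash with the public key, then compare it
    with a hash it computes itself over the update it actually received."""
    return pow(signature, E, N) == digest(update)


firmware = b"firmware v2.1"
signature = sign_update(firmware)
print(verify_update(firmware, signature))              # True
print(verify_update(b"firmware v2.1-evil", signature)) # False
```

The device needs only `N` and `E`, so nothing secret has to be stored on it for this check; what it does need is some assurance that the public key itself is the right one, which is where certificates come in.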

Do I have to do all of this stuff?

All of this is well and good, but it’s a lot of overhead for tiny IoT devices. If you’re running an 8-bit machine, you’re going to have a hard time doing the math required for elliptic curve cryptography, for instance. So… does this mean that you have to upgrade your entire platform just to enable authentication and other security measures?

I don’t know that this is settled. It’s clear that it is possible to compromise a network through something as trivial as a thermostat – there’s an existence proof for that. In addition, as we’ll see next time, there is a new cryptography math proposal undergoing vetting that apparently makes high security much more tractable on such small devices.

That aside, can you simply forgo all of this hassle? I would think that the answer to that is, “No.” Which would mean that, unless this new math works, yeah, you might have to upgrade your platform to make sure that your little device doesn’t become an entryway into a major network. Hopefully, someday, this will be an intrinsic part of a ubiquitous security stack, and you won’t have to think about it.

But you may have other ideas, which we solicit below…

[Editor’s note: the next article in the series, on encryption, can be found here.]

More info:

TCG Infrastructure Working Group Reference Architecture for Interoperability (Part I)

TCG Infrastructure Working Group Architecture Part II – Integrity Management

TCG Trusted Network Connect TNC Architecture for Interoperability

How, if at all, do you envision doing authentication in small IoT edge nodes?

“If you’re running an 8-bit machine, you’re going to” cause yourself a LOT of problems … slower development with higher complications, and, as noted above, you box yourself into a corner lacking both the address space and the cycles to implement necessary safe authentication and very good crypto algorithms.

When a high performance SOC+memory is less than $5/ea in modest volume, even with a high volume product, the increased NRE+support will almost NEVER be paid back trying to save another dollar or two on the product.

Maybe on a project with the SOC embedded in an ASIC, but even then I’d be surprised.

And remember, if you can get to, or modify, the SOC data/code on its pins, or with an on-chip debugger/ICE … your authentication and crypto are probably not secure, lacking a really trusted execution platform.